Hardening the ElastiCache Benchmark: Observable Lifecycle & Durable S3 Exports

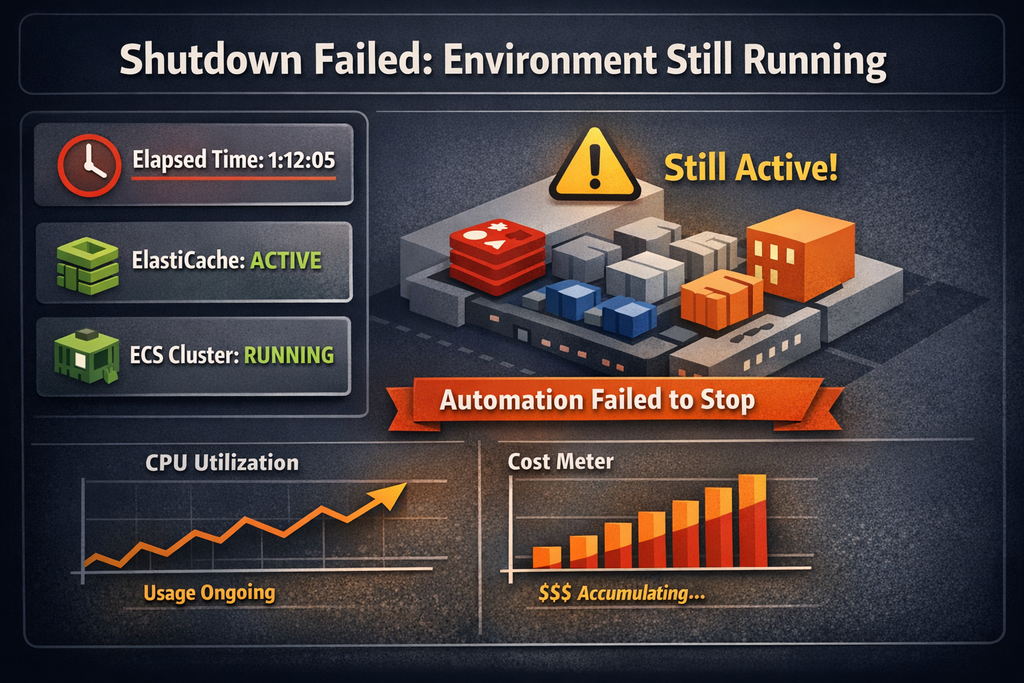

AWS ElastiCache Lab (built on Amazon ElastiCache) is a repeatable performance harness for comparing cache configurations under controlled load. Each run is time-boxed, produces exportable artifacts, and tears down deterministically to keep both cost and comparability under control.

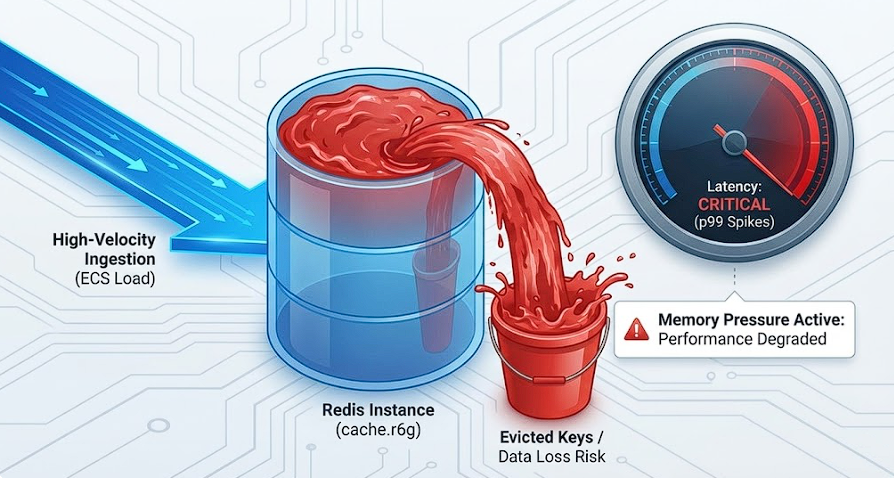

As the harness scales up (more Amazon ECS tasks, higher memory fill rates), the bottleneck often moves away from ElastiCache itself and toward the lifecycle boundary: shutdown, exports, and verification. The engine can change (Redis today, Valkey next), but the boundary and the evidence pipeline should not.
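To make the idea of an engine-independent lifecycle boundary concrete, here is a minimal sketch in Python. It is not the harness's actual code: `RunLifecycle`, its fields, and the `load_step`, `export`, and `verify` callables are hypothetical names introduced for illustration only. The sketch assumes a run is a time-boxed loop followed by the same three boundary phases (shutdown, export, verification), whichever engine is under test.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class RunLifecycle:
    """Hypothetical time-boxed run. The engine is only a label here;
    the boundary phases are identical for every engine."""
    engine: str
    time_box_seconds: float
    events: List[str] = field(default_factory=list)

    def run(
        self,
        load_step: Callable[[], None],
        export: Callable[[], str],
        verify: Callable[[str], bool],
    ) -> bool:
        deadline = time.monotonic() + self.time_box_seconds
        self.events.append("start")
        try:
            # Generate load until the time box expires.
            while time.monotonic() < deadline:
                load_step()
        finally:
            # Shutdown is recorded even if the load phase raises,
            # so teardown always leaves a trace in the event log.
            self.events.append("shutdown")
        # Export and verification only run after a clean load phase.
        artifact = export()
        self.events.append("export")
        ok = verify(artifact)
        self.events.append("verified" if ok else "verify_failed")
        return ok


if __name__ == "__main__":
    for engine in ("redis", "valkey"):
        lifecycle = RunLifecycle(engine=engine, time_box_seconds=0.01)
        passed = lifecycle.run(
            load_step=lambda: None,                  # stand-in for real load
            export=lambda: "artifact",               # stand-in for an S3 export
            verify=lambda a: a == "artifact",        # stand-in for a checksum check
        )
        print(engine, passed, lifecycle.events)
```

The point of the sketch is that swapping the `engine` label changes nothing about the ordering of events, which is what lets runs stay comparable across engines.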